The browser as an engine for prototyping connected objects

What if prototyping a connected object was like making a web page?

Prototyping connected objects usually requires specialised, low-level programming skills, which makes it challenging and time-consuming. Platforms like Arduino make this more accessible, but you still need to learn the Arduino programming language.

Connected objects are by definition networked and increasingly have sophisticated user interfaces, read their environment with sensors and interact with web, app and server components whilst maintaining their own application logic.

When we develop connected object prototypes we want to:

- Test them with users: so they should be functional and demonstrate real behaviours

- Demo them to stakeholders and others: so they should be robust and boot reliably every time

- Explore and sketch out an idea: so they should be quick to develop and debug, reusing code found elsewhere

We want a system that makes development easy in a desktop environment, lets developers re-use familiar tools and code, and then deploys the result to devices in a repeatable way, with confidence that it will behave the same there.

There's already a highly capable and standardised run-time environment with excellent developer tooling and vast reference materials and libraries of existing code available on most computers—the web browser. I think it's an ideal platform for prototyping these experiences.

When describing a browser-based platform, it's important to distinguish between prototyping and production.

Attempts have been made to use web technologies (HTML, CSS, JavaScript) and the browser as a production run-time platform. Hybrid Broadcast Broadband TV (HbbTV) standardises a stripped-down HTML profile for interactive television apps. Sony's PlayStation 4 used a WebKit-based browser environment and WebGL for parts of the user interface. However, I'm specifically excluding production uses in this description.

For prototyping, the browser environment offers a vast set of capabilities that are directly applicable to the kinds of experiences we want to create:

- network access and support for HTTP and realtime technologies

- media playback support, sound creation

- access to connected peripherals such as cameras, microphones, MIDI devices, Bluetooth and even USB

- a fully-featured programming language (JavaScript)

- excellent developer support via the Web Inspector, offering run-time debugging, editing, profiling and network inspection

- near-native performance using WebAssembly

- a security sandbox that isolates running code and guards against malicious operations

Many of these technologies are standardised through the W3C so browsers from multiple vendors can run the same applications. However, some of the device-access technologies such as WebMIDI, WebBluetooth and WebUSB are only supported by Google Chrome.

A runtime developed predominantly for graphical user interfaces poses challenges, most of them stemming from the security sandbox:

- the "same origin policy" is stricter than in other runtime environments, which do not restrict the domains that data can be fetched from

- the application lifecycle generally begins when a page is loaded and ends when the page is closed: this needs to be mapped onto the device lifecycle (power-on to power-off)

- web applications are generally run on the client side but require an HTTP server to deliver them to the browser initially

- network access is required to load the page, although once loaded, Service Workers offer a mechanism for enabling the application to work completely offline

- communication is client to server for HTTP requests and WebSockets, although WebRTC offers a data channel that is peer-to-peer

- increasingly, privileged actions (e.g. audio playback, notifications) must be initiated by an explicit user gesture such as a click or touch, and some (e.g. geolocation, notifications) trigger a browser dialog that asks the user for permission

Case Study: Neue Radio

Neue Radio is an experimental framework that takes this approach, developed as part of the BBC's Better Radio Experiences project. It applies this technical architecture to prototyping interesting radio and audio experiences. It builds on a body of work developed by BBC Research and Development. Previous projects such as Radiodan and Tellybox found that people react more viscerally to physical prototypes in boxes.

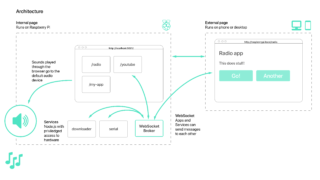

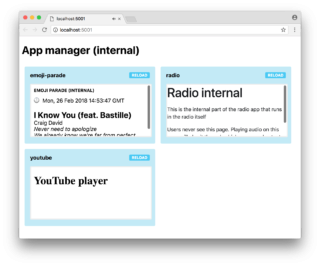

The stack uses Chromium, Google's open source version of Chrome, running on a Raspberry Pi 3. Each prototype is created as an app. Apps are discrete web pages that provide a slice of functionality, for example a particular radio experience or an interesting user interface.

Apps are split into two parts, each with an HTML page as its entry point. The internal part runs on the device, in this case a Raspberry Pi. Optionally, an external part can provide a remote control web app that the user loads in their own web browser.

This split is difficult to communicate, as it's unusual for a web app not to run close to the user, on their own device.

A Node.js web server runs on the device and serves the locally-installed apps, allowing them to work offline or on networks without internet access. In addition, an app can be loaded by specifying a URL for its internal and external pages.
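To make the idea of an on-device server concrete, here is a minimal sketch of a static file server using only Node.js built-in modules. It is not the Neue Radio implementation: the `apps` directory layout, the `createAppServer` and `resolveAppFile` names, and the small MIME table are all assumptions for illustration.

```javascript
// Sketch only: serve locally installed apps from a directory on the device.
// The directory layout and function names are hypothetical.
const http = require('node:http');
const fs = require('node:fs');
const path = require('node:path');

const MIME_TYPES = {
  '.html': 'text/html',
  '.js': 'text/javascript',
  '.css': 'text/css',
  '.json': 'application/json',
};

// Map a request URL onto a file inside `root`. Returns null if the URL is
// malformed or would resolve to somewhere outside `root`.
function resolveAppFile(root, requestUrl) {
  const { pathname } = new URL(requestUrl, 'http://localhost');
  let decoded;
  try {
    decoded = decodeURIComponent(pathname);
  } catch {
    return null; // invalid percent-encoding
  }
  // A directory request such as /radio/ is served its index.html.
  const relative = decoded.endsWith('/') ? decoded + 'index.html' : decoded;
  const filePath = path.join(root, relative);
  const inside = filePath.startsWith(root + path.sep);
  return inside ? filePath : null;
}

// Build (but do not start) an HTTP server for the apps under `root`.
function createAppServer(root) {
  const absoluteRoot = path.resolve(root);
  return http.createServer((req, res) => {
    const filePath = resolveAppFile(absoluteRoot, req.url);
    if (!filePath) {
      res.writeHead(403);
      res.end('Forbidden');
      return;
    }
    fs.readFile(filePath, (err, data) => {
      if (err) {
        res.writeHead(404);
        res.end('Not found');
        return;
      }
      const type = MIME_TYPES[path.extname(filePath)] || 'application/octet-stream';
      res.writeHead(200, { 'Content-Type': type });
      res.end(data);
    });
  });
}
```

Under these assumptions, `createAppServer('apps').listen(5000)` would serve `apps/radio/index.html` at `http://localhost:5000/radio/`, with no internet connection needed.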

For situations where privileged access is required and a browser API does not provide the necessary functionality, such as accessing connected sensors, "Services" can be run to provide it.

Apps and Services communicate through a simple shared WebSocket broker that broadcasts messages to all connected clients.
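The broadcast behaviour can be sketched without any network code. The `Broker` class below is hypothetical and models only the fan-out: each client is any object with a `send(string)` method, as a WebSocket connection has, and the `{ topic, payload }` message shape is an assumption rather than the actual Neue Radio protocol.

```javascript
// Sketch only: an in-memory model of a broker that broadcasts every
// message to all connected clients, including the sender.
class Broker {
  constructor() {
    this.clients = new Set();
  }

  // Register a client and return a function that disconnects it.
  connect(client) {
    this.clients.add(client);
    return () => this.clients.delete(client);
  }

  // Serialise the message once and send it to every connected client.
  publish(topic, payload) {
    const frame = JSON.stringify({ topic, payload });
    for (const client of this.clients) {
      client.send(frame);
    }
  }
}
```

Because every client sees every message, filtering by topic is left to the receiver. That keeps the broker trivial, at the cost of some redundant traffic, which is a reasonable trade for a prototype.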

A Manager Service runs as a tab in the headless web browser and is responsible for loading all the apps' internal pages as iframes and managing their lifecycle. Reloading an app is as simple as reloading the iframe.
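A rough sketch of that lifecycle management follows. The `createManager` function and its method names are hypothetical. The `doc` argument stands in for the browser's `document` and is passed in so the logic can be exercised outside a browser.

```javascript
// Sketch only: load each app's internal page into an iframe and track it
// by name so it can be reloaded or removed later.
function createManager(doc, container) {
  const frames = new Map();

  return {
    // Create an iframe for the app and attach it to the page.
    load(name, url) {
      const frame = doc.createElement('iframe');
      frame.src = url;
      container.appendChild(frame);
      frames.set(name, frame);
      return frame;
    },

    // Restart an app. In a browser, assigning src again makes the iframe
    // navigate afresh, even when the value is unchanged.
    reload(name) {
      const frame = frames.get(name);
      if (!frame) return false;
      frame.src = frame.src;
      return true;
    },

    // Stop an app by removing its iframe from the page.
    unload(name) {
      const frame = frames.get(name);
      if (!frame) return false;
      frame.remove();
      frames.delete(name);
      return true;
    },
  };
}
```

In a browser this might be used as `createManager(document, document.body).load('radio', '/radio/')`, where the app name and path are again only examples.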

Choosing JavaScript for the WebSocket server, the web-based manager, and the Apps and Services reduces the cognitive overhead of working on different parts of the system and encourages code reuse. For example, a shared WebSocket library encodes the communication protocol and supports useful patterns like re-connection. Because WebSockets are the system's communication mechanism, Services can in fact be written in any language. Apps can be written in any language that can be compiled to JavaScript and run in the browser.
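As an illustration of the re-connection pattern, here is a minimal sketch of a client wrapper that retries with exponential backoff. It is not the project's shared library: `connectWithRetry`, `backoffDelay`, the JSON message encoding and the default delays are all assumptions. The WebSocket constructor is injectable so the same code can run in a browser or be tested with a stand-in.

```javascript
// Delay before reconnect attempt N: doubles each time, capped at maxMs.
function backoffDelay(attempt, { baseMs = 500, maxMs = 30000 } = {}) {
  return Math.min(maxMs, baseMs * 2 ** attempt);
}

// Sketch only: open a WebSocket and reopen it whenever it closes
// unexpectedly. Messages are assumed to be JSON-encoded.
function connectWithRetry(url, onMessage, options = {}) {
  const { WebSocketImpl = globalThis.WebSocket, ...backoff } = options;
  let attempt = 0;
  let closedByUser = false;
  let socket;
  let timer;

  function open() {
    socket = new WebSocketImpl(url);
    socket.onopen = () => {
      attempt = 0; // a successful connection resets the backoff
    };
    socket.onmessage = (event) => onMessage(JSON.parse(event.data));
    socket.onclose = () => {
      if (closedByUser) return;
      timer = setTimeout(open, backoffDelay(attempt, backoff));
      attempt += 1;
    };
  }

  open();

  return {
    send: (message) => socket.send(JSON.stringify(message)),
    close: () => {
      closedByUser = true;
      clearTimeout(timer);
      socket.close();
    },
  };
}
```

Keeping this logic in one shared module means every App and Service written in JavaScript recovers in the same way when the broker restarts.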

Chromium contains a remote debugging protocol which allows a Web Inspector running on a developer's own computer to connect to the headless browser running on the Raspberry Pi. This is an extremely powerful development tool as it enables full inspection of the runtime environment (such as local storage and media playback) and debugging of live running code. Crucially, the Inspector allows not just observation but also editing Apps and running new code, which enables a much more iterative style of development.
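When Chromium is started with the `--remote-debugging-port` flag, it exposes an HTTP endpoint that lists its debuggable targets as JSON. The sketch below queries that list and keeps the pages. It assumes the port is 9222 and is reachable from the developer's machine (for example through an SSH tunnel), and it relies on the global `fetch` available in recent Node.js versions. The `listPages` and `pageTargets` names are hypothetical.

```javascript
// Keep only page targets and the fields useful for attaching an inspector.
function pageTargets(targets) {
  return targets
    .filter((target) => target.type === 'page')
    .map(({ title, url, webSocketDebuggerUrl }) => ({ title, url, webSocketDebuggerUrl }));
}

// Sketch only: ask a Chromium instance for its list of debuggable pages.
// The host and port are assumptions about how the device is set up.
async function listPages(host, port = 9222) {
  const response = await fetch(`http://${host}:${port}/json/list`);
  if (!response.ok) {
    throw new Error(`Unexpected status ${response.status}`);
  }
  return pageTargets(await response.json());
}
```

Each entry's `webSocketDebuggerUrl` is the address a debugging client connects to. In day-to-day use the Web Inspector handles this itself, so a script like this is mainly useful for checking that the device's browser is reachable.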

Find out more about Neue Radio on GitHub.

The project team were: Libby Miller, Dan Nuttall and Kate Towsey. The prototypes were developed by members of BBC R&D and Richard Sewell.

Photographs by Joanna Rusznica and David Man.

Thanks to Libby Miller for reviewing a draft of this post.